Building Real-Time Log Anomaly Detection Models with AWS

Question

You work for the information security department of a major corporation.

You have been asked to build a solution that detects web application log anomalies to protect your organization from fraudulent activity.

The system needs to have near-real-time updates to the model where log entry data points dynamically change the underlying model as the log files are updated.

Which AWS service component do you use to implement the best algorithm based on these requirements?

Answers

Explanations

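A. SageMaker Random Cut Forest
B. Kinesis Data Streams Naive Bayes Classifier
C. Kinesis Data Analytics Random Cut Forest
D. Kinesis Data Analytics Nearest Neighbor

Answer: C.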

Option A is incorrect because SageMaker Random Cut Forest is best used for large batch data sets where you don't need to update the model frequently (see the AWS Kinesis Data Analytics documentation: https://docs.aws.amazon.com/kinesisanalytics/latest/sqlref/sqlrf-random-cut-forest.html).

Option B is incorrect because the Naive Bayes Classifier is a classification algorithm that assumes the features of a data point are independent of one another; it is not an anomaly detection algorithm.

In addition, the Kinesis Data Streams service does not have machine learning algorithm capabilities (see the AWS Kinesis Streams developer documentation: https://docs.aws.amazon.com/streams/latest/dev/introduction.html).

Option C is correct.

The Kinesis Data Analytics Random Cut Forest algorithm scores each record as it arrives on the stream and keeps adjusting its model with the incoming records, which is what the near-real-time model update requirement calls for (see the AWS Kinesis Data Analytics documentation: https://docs.aws.amazon.com/kinesisanalytics/latest/sqlref/sqlrf-random-cut-forest.html).
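As a rough illustration of how this option is used, the sketch below builds the kind of in-application SQL that calls the `RANDOM_CUT_FOREST` function described in the documentation linked above. The helper `build_rcf_sql`, the stream and pump names, and the log column names are hypothetical; the four numeric arguments (number of trees, sub-sample size, time decay, shingle size) are assumed to match the documented defaults and should be checked against the SQL reference. The generated SQL has not been run against a live application.

```python
def build_rcf_sql(columns, trees=100, sub_sample=256, time_decay=100000,
                  shingle=1, source="SOURCE_SQL_STREAM_001"):
    """Return in-application SQL that scores each record with RANDOM_CUT_FOREST.

    `columns` are numeric log features (hypothetical names); the source
    stream name is an assumption about how the application is configured.
    """
    cols_ddl = ",\n".join(f'    "{c}" DOUBLE' for c in columns)
    cols_sel = ", ".join(f'"{c}"' for c in columns)
    return f'''CREATE OR REPLACE STREAM "SCORED_STREAM" (
{cols_ddl},
    "ANOMALY_SCORE" DOUBLE);

CREATE OR REPLACE PUMP "SCORE_PUMP" AS
INSERT INTO "SCORED_STREAM"
SELECT STREAM {cols_sel}, "ANOMALY_SCORE"
FROM TABLE(RANDOM_CUT_FOREST(
    CURSOR(SELECT STREAM {cols_sel} FROM "{source}"),
    {trees}, {sub_sample}, {time_decay}, {shingle}));
'''


# Hypothetical numeric features extracted from web application logs.
print(build_rcf_sql(["response_time_ms", "bytes_sent"]))
```

Each output row carries the original columns plus an `ANOMALY_SCORE`, so a downstream consumer can alert on records whose score is unusually high.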

Option D is incorrect. Kinesis Data Analytics provides a hotspots function that detects higher than normal activity using the distance between a hotspot and its nearest neighbor, but it does not provide ML model update capabilities (see the AWS Kinesis Data Analytics documentation: https://docs.aws.amazon.com/kinesisanalytics/latest/sqlref/sqlrf-hotspots.html).

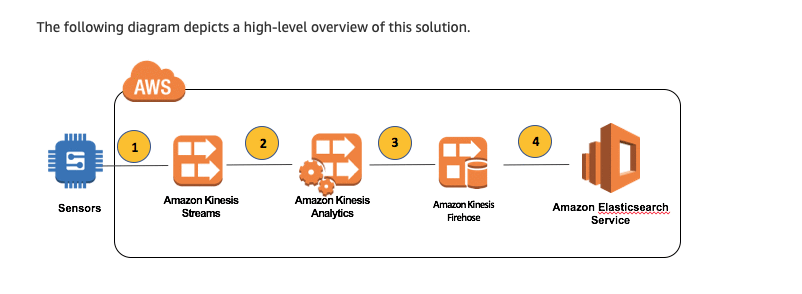

Diagram:

Here is a screenshot from the AWS Big Data blog:

Reference:

For a complete description of the use of Kinesis Data Analytics and the random cut forest algorithm, see the AWS Big Data blog post titled "Perform Near Real-time Analytics on Streaming Data with Amazon Kinesis and Amazon Elasticsearch Service": https://aws.amazon.com/blogs/big-data/perform-near-real-time-analytics-on-streaming-data-with-amazon-kinesis-and-amazon-elasticsearch-service/

The most appropriate AWS service component for the given requirements is Kinesis Data Analytics Random Cut Forest (RCF), so the answer is C.

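SageMaker is a managed service that helps data scientists and developers build, train, and deploy machine learning models at scale. It provides a comprehensive set of tools and services for end-to-end machine learning workflows. Its Random Cut Forest algorithm, however, is best used for large batch data sets where the model does not need frequent updates, so it does not meet the requirement that new log entries change the model as they arrive.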

Random Cut Forest (RCF) is a popular algorithm for anomaly detection in high-dimensional data. It is well suited to detecting anomalies in log files because it can handle data with a large number of features and can score records in near-real-time. The algorithm builds a forest of random trees, where each tree is a set of recursive binary splits on the data. Anomalies are the data points that are separated from the rest after fewer splits, that is, points with a shorter average path length across the forest.
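The idea of random splits and short path lengths can be sketched in a few lines of Python. This is a simplified illustration, not the algorithm AWS runs: it keeps a sliding window of recent points, cuts random samples of that window until the new point is isolated, and uses the inverse of the average isolation depth as the score. The class `StreamingDetector`, its parameters, and the two features (response time in milliseconds, error rate) are all hypothetical.

```python
import random
from collections import deque

MAX_DEPTH = 16  # cap on recursion; reaching it means "not isolated"


def isolation_depth(point, sample, rng, depth=0):
    """Number of random cuts needed to separate `point` from `sample`."""
    if not sample:
        return depth
    if depth >= MAX_DEPTH:
        return MAX_DEPTH
    dims = range(len(point))
    pts = sample + [point]
    lows = [min(p[d] for p in pts) for d in dims]
    highs = [max(p[d] for p in pts) for d in dims]
    spans = [hi - lo for lo, hi in zip(lows, highs)]
    if sum(spans) == 0:
        return MAX_DEPTH  # identical points cannot be separated
    # Pick the cut dimension with probability proportional to its range.
    d = rng.choices(dims, weights=spans)[0]
    cut = rng.uniform(lows[d], highs[d])
    side = point[d] <= cut
    same_side = [p for p in sample if (p[d] <= cut) == side]
    return isolation_depth(point, same_side, rng, depth + 1)


class StreamingDetector:
    """Scores each point against recent data, then adds it to the window."""

    def __init__(self, window=128, trees=30, sample_size=32, seed=0):
        self.window = deque(maxlen=window)
        self.trees = trees
        self.sample_size = sample_size
        self.rng = random.Random(seed)

    def score_and_update(self, point):
        score = 0.0  # no score until enough history has been seen
        if len(self.window) >= self.sample_size:
            recent = list(self.window)
            depths = [
                isolation_depth(
                    point, self.rng.sample(recent, self.sample_size), self.rng
                )
                for _ in range(self.trees)
            ]
            score = len(depths) / sum(depths)  # 1 / average depth
        self.window.append(point)  # the new point now shapes later scores
        return score


detector = StreamingDetector()
normal = [(100.0 + i % 10, 0.20 + 0.01 * (i % 7)) for i in range(200)]
normal_scores = [detector.score_and_update(p) for p in normal]
outlier_score = detector.score_and_update((5000.0, 30.0))
print(max(normal_scores), outlier_score)
```

Because every call appends the point to the window after scoring it, the data that later points are compared against changes with each record, which mirrors the "model updates as log entries arrive" requirement in the question.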

Kinesis Data Streams is a service for real-time streaming data processing, and Kinesis Data Analytics is a managed service for analyzing streaming data with SQL or Java. Kinesis Data Streams has no machine learning algorithm capabilities, so a Kinesis Data Streams Naive Bayes Classifier is not an available option. Kinesis Data Analytics, by contrast, provides Random Cut Forest out of the box as a SQL function that assigns an anomaly score to each record on the stream. Kinesis Data Analytics Nearest Neighbor (the hotspots function) detects higher than normal activity but does not provide ML model update capabilities, so it is not the best choice for detecting anomalies in log files.

Therefore, Kinesis Data Analytics Random Cut Forest is the best option for detecting web application log anomalies in near-real-time while dynamically updating the underlying model.