Minimum Configuration for Redshift SSD Storage

Question

Currently, a company uses Redshift to store its analyzed data.

They need to configure the Redshift cluster for a demo.

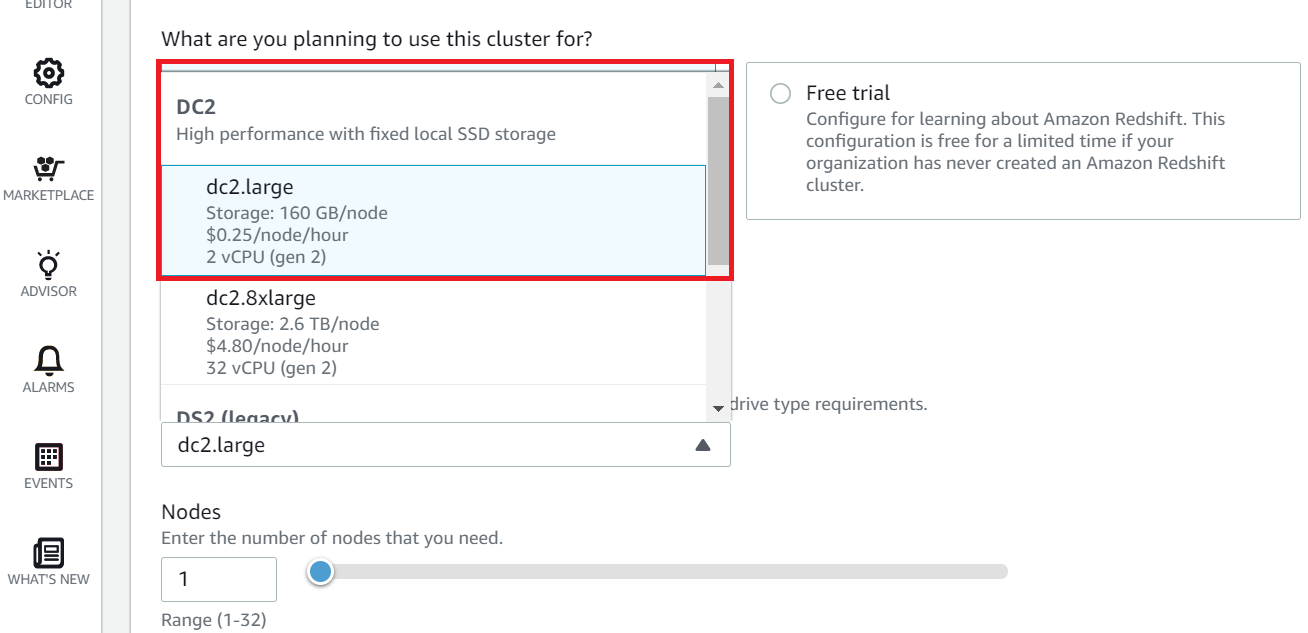

What is the minimum configuration a user can choose for SSD storage when launching a Redshift cluster in the console?

Answers

Explanations


A. B. C. D.

Answer - D.

For more information on Redshift, refer to the URL below:

https://docs.aws.amazon.com/redshift/latest/gsg/rs-gsg-launch-sample-cluster.html

Redshift is a data warehousing service provided by AWS that lets you store and analyze large amounts of data in a scalable and cost-effective manner. In the classic node lineup, the storage type follows from the node type you choose: dense compute (DC) nodes use SSDs, and dense storage (DS) nodes use HDDs. SSD storage is faster than HDD storage, but it costs more per gigabyte.

The question asks for the smallest SSD-backed configuration the console offers, so the answer is set by the available node types and cluster sizes rather than by how much data the demo holds. The smallest choice is a single-node cluster on the smallest dense compute node type, which provides 160 GB of SSD storage.
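As a rough illustration of what such a minimal cluster looks like outside the console, the sketch below builds the arguments for the boto3 `create_cluster` call. The node type `dc2.large`, the database name `dev`, the default region, and the helper names `demo_cluster_params` and `launch_demo_cluster` are assumptions made for this example, not details from the question; node type availability varies by region and over time.

```python
def demo_cluster_params(cluster_id, admin_user, admin_password):
    """Build keyword arguments for a single-node, SSD-backed demo cluster."""
    return {
        "ClusterIdentifier": cluster_id,
        # A single-node cluster; NumberOfNodes is only needed for multi-node.
        "ClusterType": "single-node",
        # Assumption: dc2.large as the small dense compute (SSD) type, 160 GB per node.
        "NodeType": "dc2.large",
        "DBName": "dev",
        "MasterUsername": admin_user,
        "MasterUserPassword": admin_password,
    }


def launch_demo_cluster(params, region="us-east-1"):
    """Create the cluster. Requires boto3 and AWS credentials, and incurs charges."""
    import boto3  # imported here so the parameter builder works without boto3

    client = boto3.client("redshift", region_name=region)
    return client.create_cluster(**params)
```

Calling `demo_cluster_params("demo-cluster", "awsuser", "<password>")` only builds a dictionary; nothing is created in AWS until `launch_demo_cluster` is called with it.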

Option A, three nodes with 320 GB each, and Option B, a single node with 320 GB, both provide more storage than the smallest available configuration, so neither is the minimum.

Option C, two nodes with 128 TB each, is far more than a demo needs; capacity on that scale suits large production workloads holding hundreds of terabytes of data.

Option D, a single node with 160 GB of storage, is the smallest of the four options and is a reasonable configuration for a demo, assuming that the data set is small and the query performance requirements are modest.
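The comparison between the four options can be checked with a little arithmetic. The sketch below uses the node counts and per-node sizes as the explanation describes them, and assumes 1 TB = 1024 GB for Option C; the dictionary and function names are illustrative only.

```python
# (number of nodes, storage per node in GB) for each option as described above
options = {
    "A": (3, 320),
    "B": (1, 320),
    "C": (2, 128 * 1024),  # 128 TB per node, taking 1 TB = 1024 GB
    "D": (1, 160),
}


def total_storage_gb(option):
    """Total cluster storage in GB for the given option letter."""
    nodes, per_node_gb = options[option]
    return nodes * per_node_gb


smallest = min(options, key=total_storage_gb)
print(smallest, total_storage_gb(smallest))  # prints: D 160
```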

In summary, the minimum configuration for SSD storage using Redshift for a demo would be one node with 160GB of storage, which is Option D.