Data Pipeline for Sentiment Analysis with AWS Services

Question

You work for a startup e-commerce site that sells various consumer products.

Your company has just launched its e-commerce website.

The site provides the capability for your users to rate their purchases and the products they have purchased from your e-commerce site.

You would like to use the review data to build a recommender machine learning model. Since your e-commerce site is very new, you don't yet have a very large review dataset to use for your recommendation model.

You have decided to use the Amazon Customer Reviews dataset from the AWS website as a first data source for your machine learning model.

Since your website sells similar products to the products sold on Amazon, you will use the Amazon Customer Reviews dataset as the basis for your initial training runs of your model.

Once you have enough data from your own e-commerce site, you'll use that data. Your goal is to perform sentiment analysis on the review dataset to create your own dataset that will be the source used for your recommender machine learning model.

Which set of AWS services would you use to build your data pipeline to produce your sentiment dataset for use by your SageMaker model?

Answers

Explanations

Click on the arrows to vote for the correct answer

A. B. C. D.Answer: A.

Option A is correct.

The Amazon Customer Reviews dataset is stored on S3

You can use an AWS Glue ETL job to read the reviews from the Amazon dataset.

The ETL job calls Comprehend for each review to get the sentiment for that review.

The ETL job stores the sentiment enriched review data onto another S3 bucket in your account.

Your SageMaker model uses the S3 bucket in your account as its dataset source for training your recommender model.

Option B is incorrect.

This option has unnecessary steps.

Specifically, you don't need Athena and QuickSite to produce your sentiment enriched dataset for your machine learning model.

Option C is incorrect.

The option uses Kinesis Data Firehose unnecessarily.

The Amazon Customer Reviews dataset is stored on S3

There is no need to stream the data when you can simply read it using an ETL job.

If you used Kinesis Data Firehose to stream the data, you would have to write a lambda function to call Comprehend for each streamed review data row.

Option D is incorrect.

The option uses Kinesis Data Firehose unnecessarily.

The Amazon Customer Reviews dataset is stored on S3

There is no need to stream the data when you can simply read it using an ETL job.

That being said, this option does correctly combine Kinesis Data Firehose and lambda.

However, it lacks the Comprehend service.

You would have to write your own sentiment analysis in your lambda function.

Reference:

Please see the data repository titled Registry of Open Data on AWS, the AWS Machine Learning blog titled How to scale sentiment analysis using Amazon Comprehend, AWS Glue and Amazon Athena, and the data set titled Amazon Customer Reviews Dataset.

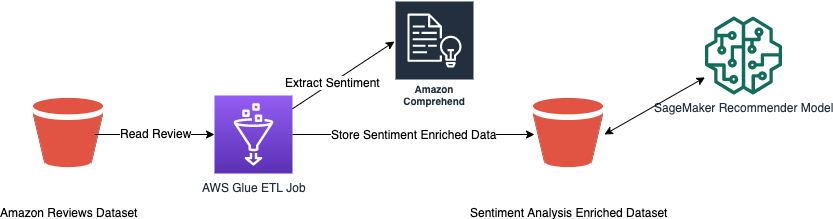

Here is a diagram of the proposed solution:

To build a data pipeline that produces a sentiment dataset for use by a SageMaker model, we need to perform sentiment analysis on the review dataset. We can use a combination of AWS services to build this data pipeline. The recommended set of AWS services to build this data pipeline is as follows:

Option A:

S3 -> AWS Glue ETL -> Comprehend -> S3 -> SageMaker

Here is a detailed explanation of each step:

S3: The first step is to store the Amazon Customer Reviews dataset and any other data you collect from your e-commerce site in S3. S3 is a highly scalable, secure, and durable object storage service that can store and retrieve data from anywhere on the web.

AWS Glue ETL: The next step is to use AWS Glue ETL (Extract, Transform, Load) to prepare the data for analysis. AWS Glue ETL is a fully managed extract, transform, and load service that makes it easy to move data between data stores. In this step, we can use AWS Glue ETL to clean and transform the data to prepare it for analysis.

Comprehend: The next step is to use Amazon Comprehend to perform sentiment analysis on the data. Amazon Comprehend is a natural language processing (NLP) service that uses machine learning to find insights and relationships in text. In this step, we can use Amazon Comprehend to extract sentiment from the reviews dataset.

S3: The next step is to store the sentiment data in S3. This will allow us to access the data later and use it to train our SageMaker model.

SageMaker: The final step is to use Amazon SageMaker to build a recommender machine learning model. Amazon SageMaker is a fully-managed service that provides every developer and data scientist with the ability to build, train, and deploy machine learning models quickly.

Option B:

S3 -> AWS Glue ETL -> Comprehend -> S3 -> Athena -> QuickSight -> SageMaker

This option is similar to Option A, with the addition of two extra services, Athena and QuickSight.

Athena: The first additional service is Athena, which is an interactive query service that allows us to analyze data in S3 using standard SQL. In this step, we can use Athena to query the sentiment data stored in S3 and perform further analysis.

QuickSight: The second additional service is QuickSight, which is a cloud-based business intelligence (BI) service that allows us to create and publish interactive dashboards. In this step, we can use QuickSight to create visualizations of the sentiment data to gain further insights.

Option C:

S3 -> Kinesis Data Firehose -> Comprehend -> S3 -> SageMaker

This option uses Kinesis Data Firehose to stream the data from S3 to Amazon Comprehend for sentiment analysis. Kinesis Data Firehose is a fully managed service for delivering real-time streaming data to destinations such as S3, Redshift, and Elasticsearch.

Option D:

S3 -> Kinesis Data Firehose -> Lambda -> S3 -> SageMaker

This option uses a Lambda function to process the data before sending it to Amazon Comprehend for sentiment analysis. Lambda is a compute service that runs code in response to events and automatically manages the compute resources for us.

In summary, the recommended set of AWS services to build a data pipeline that produces a sentiment dataset for use by a SageMaker model is Option A: S3 -> AWS Glue ETL -> Comprehend -> S3 -> SageMaker. This option allows us to store the data in S3, prepare it for analysis using AWS Glue ETL,