Question 13 of 258 from exam MLS-C01: AWS Certified Machine Learning - Specialty

Question

You work in the machine learning department of a mining company.

You and your team are working on a model to predict the minimum depth to drill to find various mineral deposits.

You are building a model based on the XGBoost algorithm.

Your team is now at the stage of training models with different hyperparameter values to find the best hyperparameter settings.

Because of the complexity of the problem, you may have to run hundreds or even thousands of hyperparameter tuning jobs to get the best result. Your machine learning pipeline also includes a batch transform step to be executed after every hyperparameter tuning job.

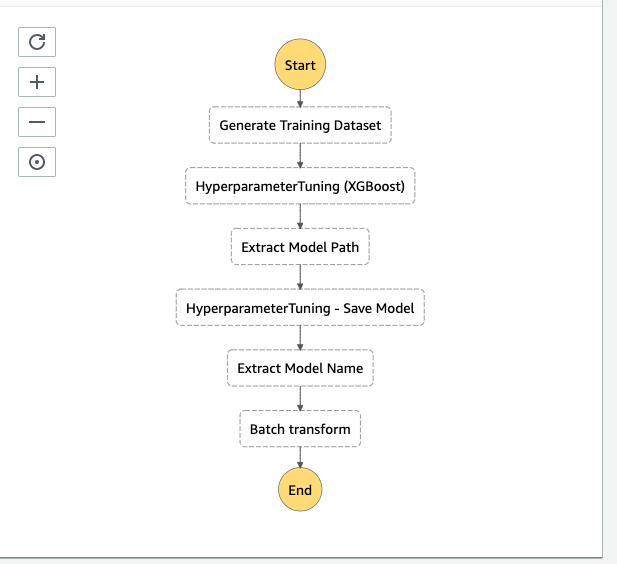

Your team lead has suggested that you use the AWS Step Functions integration with Amazon SageMaker to automate the execution of your many hyperparameter tuning jobs.

You have set up your Step Functions environment and written the following JSON-based Amazon States Language (ASL) definition for your state machine (partial listing):

{ "StartAt": "Generate Training Dataset", "States": { "Generate Training Dataset": { "Resource": "
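To make the tuning-then-transform flow described above concrete, here is a minimal Python sketch that builds a two-state ASL definition as a dictionary and serializes it to JSON. It is not the question's (truncated) listing: the state names, job names, S3 URIs, image URI, role ARN and model name are hypothetical placeholders, and a real pipeline would normally add a step that creates a SageMaker model from the best training job before the transform runs. The two `Resource` ARNs are the Step Functions service-integration ARNs for `CreateHyperParameterTuningJob` and `CreateTransformJob`; the `.sync` suffix asks Step Functions to wait for each job to finish.

```python
import json

# All names, ARNs, URIs and sizes below are hypothetical placeholders.
ROLE_ARN = "arn:aws:iam::123456789012:role/ExampleSageMakerRole"
BUCKET = "s3://example-mining-bucket"


def build_state_machine(tuning_config: dict, training_job_definition: dict,
                        transform_params: dict) -> dict:
    """Return an ASL definition that runs a tuning job, then a batch transform."""
    return {
        "StartAt": "Tune Model",
        "States": {
            "Tune Model": {
                "Type": "Task",
                # ".sync" makes Step Functions wait until the tuning job completes.
                "Resource": "arn:aws:states:::sagemaker:createHyperParameterTuningJob.sync",
                "Parameters": {
                    # A key ending in ".$" takes its value from the state input.
                    "HyperParameterTuningJobName.$": "$.TuningJobName",
                    "HyperParameterTuningJobConfig": tuning_config,
                    "TrainingJobDefinition": training_job_definition,
                },
                # Keep the original input and attach the tuning result to it.
                "ResultPath": "$.TuningResult",
                "Next": "Batch Transform",
            },
            "Batch Transform": {
                "Type": "Task",
                "Resource": "arn:aws:states:::sagemaker:createTransformJob.sync",
                "Parameters": transform_params,
                "End": True,
            },
        },
    }


definition = build_state_machine(
    tuning_config={
        "Strategy": "Bayesian",
        "HyperParameterTuningJobObjective": {"Type": "Minimize", "MetricName": "validation:rmse"},
        "ResourceLimits": {"MaxNumberOfTrainingJobs": 20, "MaxParallelTrainingJobs": 2},
    },
    training_job_definition={
        "AlgorithmSpecification": {
            "TrainingImage": "<xgboost-image-uri>",  # placeholder, not a real URI
            "TrainingInputMode": "File",
        },
        "RoleArn": ROLE_ARN,
        "OutputDataConfig": {"S3OutputPath": f"{BUCKET}/models"},
        "ResourceConfig": {"InstanceType": "ml.m5.xlarge", "InstanceCount": 1, "VolumeSizeInGB": 10},
        "StoppingCondition": {"MaxRuntimeInSeconds": 3600},
        # InputDataConfig and StaticHyperParameters omitted for brevity.
    },
    transform_params={
        "TransformJobName.$": "$.TransformJobName",
        "ModelName": "example-best-model",  # hypothetical model built from the best training job
        "TransformInput": {
            "DataSource": {"S3DataSource": {"S3DataType": "S3Prefix", "S3Uri": f"{BUCKET}/batch-input"}},
            "ContentType": "text/csv",
        },
        "TransformOutput": {"S3OutputPath": f"{BUCKET}/batch-output"},
        "TransformResources": {"InstanceType": "ml.m5.xlarge", "InstanceCount": 1},
    },
)

print(json.dumps(definition, indent=2))
```

Building the definition in code rather than by hand makes it easy to generate many tuning runs from one template, which matters when you expect hundreds of them.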


Answers: D, F.

Option A is incorrect.

RMSE stands for Root Mean Square Error, not Relative Mean Square Error.

Option B is incorrect.

The gamma parameter (alias min_split_loss) is the minimum loss reduction required to make a further partition on a leaf node of the tree.

It is a tree-growth hyperparameter, not a regression evaluation metric, so it cannot serve as the tuning objective.
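To illustrate how gamma acts during tree growth, here is a minimal Python sketch of the split-gain formula from the XGBoost "Introduction to Boosted Trees" tutorial: a candidate split is kept only if its loss reduction exceeds gamma. It is a simplification, assuming reg_lambda = 1 by default and ignoring alpha and the library's actual pruning pass; the function names and the gradient/Hessian sums are hypothetical.

```python
def split_gain(g_left: float, h_left: float, g_right: float, h_right: float,
               reg_lambda: float = 1.0, gamma: float = 0.0) -> float:
    """Loss reduction of a split (from gradient/Hessian sums) minus the gamma penalty."""
    def score(g: float, h: float) -> float:
        # Structure score of a single leaf with L2 regularization.
        return g * g / (h + reg_lambda)

    g_total, h_total = g_left + g_right, h_left + h_right
    return 0.5 * (score(g_left, h_left) + score(g_right, h_right)
                  - score(g_total, h_total)) - gamma


def should_split(gain: float) -> bool:
    # A split is worth keeping only if it reduces the loss by more than gamma.
    return gain > 0.0


# Hypothetical gradient/Hessian sums for the two children of one candidate split.
gain_no_penalty = split_gain(-4.0, 2.0, 4.0, 2.0, gamma=0.0)
gain_high_penalty = split_gain(-4.0, 2.0, 4.0, 2.0, gamma=10.0)
print(should_split(gain_no_penalty), should_split(gain_high_penalty))  # True False
```

With these sums the raw gain is 16/3 (about 5.33), so the split survives with gamma = 0 but is rejected with gamma = 10; a larger gamma therefore produces smaller, more conservative trees.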

Option C is incorrect.

The alpha parameter (alias reg_alpha) is the L1 regularization term on weights.

It is a regularization hyperparameter, not a regression evaluation metric, so it cannot serve as the tuning objective.
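A compact way to see where gamma and alpha act is the regularization term XGBoost adds to the training loss for each tree. The "Introduction to Boosted Trees" tutorial writes it with the $\gamma T$ and $\lambda$ terms; the $\alpha$ term below is the L1 penalty that the alpha parameter adds, per the XGBoost Parameters page. Here $T$ is the number of leaves and $w_j$ the weight of leaf $j$.

$$
\Omega(f) = \gamma T + \frac{1}{2}\lambda \sum_{j=1}^{T} w_j^{2} + \alpha \sum_{j=1}^{T} \lvert w_j \rvert
$$

Both gamma and alpha only penalize model complexity during training; neither measures prediction error.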

Option D is correct.

Your code specifies RMSE as the objective metric that the tuning job uses to evaluate each training run.

RMSE stands for Root Mean Square Error.
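For comparison, the objective part of a SageMaker tuning configuration might look like the Python dictionary below, written in the shape of the `HyperParameterTuningJobConfig` structure of the `CreateHyperParameterTuningJob` API. Because the question's own listing is truncated, the metric name `validation:rmse`, the ranges, the limits and the two helper functions are assumptions for illustration. The point is that RMSE is the metric the tuner minimizes, while alpha and gamma appear only as hyperparameter ranges to search.

```python
from typing import List, Tuple

# Hypothetical values; only the structure follows the CreateHyperParameterTuningJob API.
tuning_job_config = {
    "Strategy": "Bayesian",
    "HyperParameterTuningJobObjective": {
        # RMSE is an error measure, so lower is better.
        "Type": "Minimize",
        "MetricName": "validation:rmse",
    },
    "ResourceLimits": {"MaxNumberOfTrainingJobs": 100, "MaxParallelTrainingJobs": 10},
    "ParameterRanges": {
        "ContinuousParameterRanges": [
            # alpha and gamma are searched over; they are not the objective.
            {"Name": "alpha", "MinValue": "0", "MaxValue": "10"},
            {"Name": "gamma", "MinValue": "0", "MaxValue": "5"},
        ],
        "IntegerParameterRanges": [
            {"Name": "max_depth", "MinValue": "3", "MaxValue": "10"},
        ],
    },
}


def objective_metric(config: dict) -> Tuple[str, str]:
    """Return (direction, metric name) of a tuning configuration."""
    objective = config["HyperParameterTuningJobObjective"]
    return objective["Type"], objective["MetricName"]


def tuned_parameter_names(config: dict) -> List[str]:
    """List the hyperparameters the tuner searches over, in declaration order."""
    ranges = config["ParameterRanges"]
    kinds = ("ContinuousParameterRanges", "IntegerParameterRanges", "CategoricalParameterRanges")
    return [r["Name"] for kind in kinds for r in ranges.get(kind, [])]


print(objective_metric(tuning_job_config))
print(tuned_parameter_names(tuning_job_config))
```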

Option E is incorrect.

Your code specifies RMSE as the objective metric that the tuning job uses to evaluate each training run.

RMSE stands for Root Mean Square Error, not Mean Square Error.
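The difference between the two metrics is easy to see in code. This minimal sketch computes both from scratch; the drill-depth values are hypothetical and chosen so the arithmetic comes out even.

```python
import math
from typing import Sequence


def mse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean Square Error: the average of the squared residuals."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of the same length")
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root Mean Square Error: the square root of the MSE."""
    return math.sqrt(mse(y_true, y_pred))


# Hypothetical minimum drill depths in metres (illustrative numbers, not real data).
actual = [100.0, 120.0, 140.0, 160.0]
predicted = [103.0, 115.0, 141.0, 159.0]
print(mse(actual, predicted))   # 9.0  (squared metres)
print(rmse(actual, predicted))  # 3.0  (metres)
```

Because RMSE is the square root of MSE, it is expressed in the same units as the target, while MSE is in squared units; since the square root is monotonic, ranking models by either metric gives the same order.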

Option F is correct.

Increasing the value of the alpha parameter makes the model more conservative.
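Why a larger alpha means a more conservative model can be shown with the leaf-weight formula. The sketch below assumes the standard second-order leaf weight with an L1 soft threshold applied to the gradient sum, which is a simplified view of how XGBoost uses alpha (it ignores max_delta_step and other details). The function names and the gradient/Hessian sums are hypothetical.

```python
def soft_threshold(g: float, alpha: float) -> float:
    """Shrink a gradient sum toward zero by alpha (the L1 soft threshold)."""
    if g > alpha:
        return g - alpha
    if g < -alpha:
        return g + alpha
    return 0.0


def leaf_weight(g: float, h: float, reg_lambda: float = 1.0, alpha: float = 0.0) -> float:
    """Leaf weight for gradient sum g and Hessian sum h with L1 (alpha) and L2 (lambda) penalties."""
    shrunk = soft_threshold(g, alpha)
    if shrunk == 0.0:
        return 0.0  # the L1 penalty zeroes out this leaf entirely
    return -shrunk / (h + reg_lambda)


# Hypothetical gradient/Hessian sums for one leaf, evaluated at increasing alpha.
for a in (0.0, 2.0, 5.0, 10.0):
    print(a, leaf_weight(g=-8.0, h=3.0, alpha=a))
```

With these sums the leaf weight drops from 2.0 to 1.5, 0.75 and finally 0 as alpha grows: each leaf contributes less to the prediction, which is what "more conservative" means here.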

Option G is incorrect.

Increasing the value of the alpha parameter makes the model more conservative, not less conservative.

Option H is incorrect.

Increasing the value of the alpha parameter makes the model more conservative.

It does not make the model gain precision at the expense of accuracy.

Reference:

Please see the Amazon announcement "Amazon SageMaker Announces New Machine Learning Capabilities for Orchestration, Experimentation and Collaboration", the AWS Step Functions Developer Guide page "Manage Amazon SageMaker with Step Functions", the Amazon SageMaker Developer Guide page "Tune an XGBoost Model", and the XGBoost documentation page "XGBoost Parameters".